Error, bias, variance, and the human condition

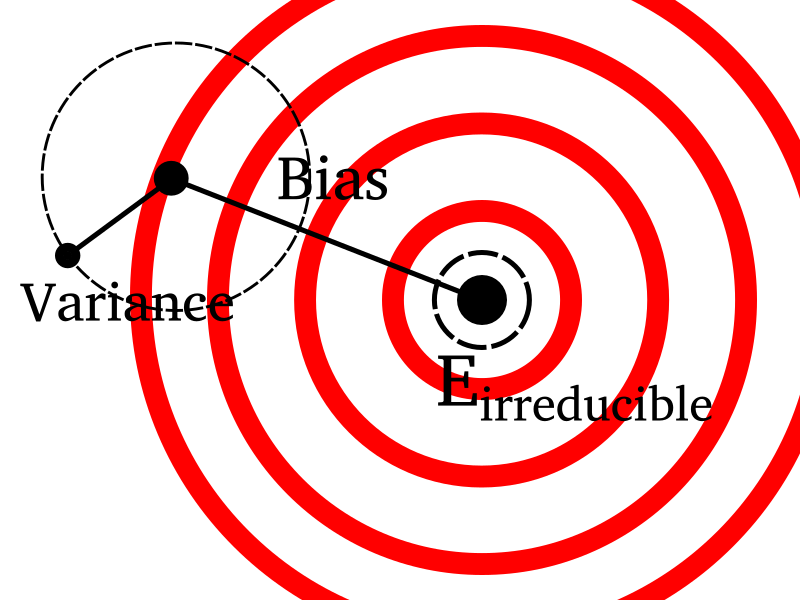

Error = Irreducible Error + Bias + Variance

A fundamentally important concept from machine learning and statistics is the decomposition of prediction error.

Very informally: the total “wrongness” of a predictor is the sum of (at least) three subtypes of “wrongness”:

- Irreducible error. Some prediction tasks are intrinsically more difficult than others. If a system is complex, chaotic, or only partially observed, then we will have a hard time making accurate predictions about it. But if a system is simple, linear, or fully observed, then we will have much less difficulty making accurate predictions. This irreducible error is a property of the prediction task itself—any prediction technique we might choose will be subject to it.

- Bias. When we choose a model for a prediction task, we impose a set of corresponding assumptions—informally, we can call these assumptions biases. For example: if we choose to use linear regression, then we are in effect assuming that our predicted value \(y\) is generated by a linear combination of input variables \(X\): that is, \(y = Xw + b \). If this assumption is false (and it almost always is), then any linear regression that we choose will make errors when we use it for prediction. This bias represents the predictor’s systemic mistakes.

- Variance. Our predictor may have an element of randomness to it; it may make different predictions for the same inputs if we run it multiple times. This isn’t usually a good design for a predictor (unless it operates in an adversarial setting where some unpredictability is useful), so we usually implement fully deterministic predictors. However, even if our predictor is totally deterministic, it will be subject to the randomness in the dataset we fit it to. That is, if we replaced our dataset with a new dataset and re-fit the predictor, then it might make radically different predictions. We use the term variance to refer to the predictor’s randomness. It represents the predictor’s unreliability.

Some interesting phenomena that data scientists observe in practice:

- There is usually a tradeoff between a predictor’s bias and variance. That is: predictors with high bias usually have low variance; and predictors with high variance usually have low bias.

- The parameter which controls this tradeoff is the complexity of the predictor. I.e., simple predictors tend to have high bias/low variance, while complicated predictors usually have low bias/high variance.

- The optimal amount of predictor complexity is usually proportional to the amount of data available for training that predictor. That is: we are better off using simple models when we have little data; and complicated models when we have a lot of data.

Human Fallibility

Most of us go through life with some concept of human fallibility echoing in our heads:

I’m not perfect—I’m only human.

or

Humans make mistakes.

This is with good reason. History is replete with examples of poor judgment. And when we rely on humans—whether it be ourselves or someone else—we are often met with disappointment.

What happens when we view human fallibility through the lens of bias, variance, and irreducible error?

Irreducible Error

Many human mistakes result from fundamental difficulties: the world is complex and mostly unobserved. There are unknown unknowns. We are subjected to certain unavoidable challenges as we navigate this uncertain environment.

One could invoke religious language, and call this kind of error original sin.

A classic concept from Stoic philosophy: we can divide our circumstances into those within our control, and those outside of our control.

Irreducible error stems from the circumstances that are out of our control.

Stoicism makes the useful suggestion that we focus on the things within our control, and not attach emotional significance to the things outside our control.

My pessimism inclines me to think irreducible error is large.

My optimism inclines me to think that humans do pretty well in spite of it, all things considered.

High Bias

A lack of subtlety or nuance. Black and white thinking.

This is—perhaps to a small degree—within our control.

Some worthwhile questions to ask ourselves, in order to understand or address our personal biases:

- Should I trust my reflexive reaction in this situation? Or is deeper deliberation warranted?

- What incorrect assumptions am I taking for granted?

- What nuance am I failing to see in the present situation?

- What bullshit stories am I telling myself?

- Who can I disagree with in a constructive manner, to help correct my own bias?

High Variance

I wager that any human who thinks much about their own behavior will eventually reach the same, puzzling conclusion: I fail to do the things I know I should be doing—even when I claim that I want to do them.

An undisciplined mind. Impulsiveness.

What Aristotle called “incontinence”.

Freudian view: the human mind is composed of conflicting subconscious wills. They may be poorly integrated—behavior will depend on which of these wills happens to be dominant at a given time.

Some worthwhile questions to ask, in order to examine our own “variances”:

- What values or ideals do I hold, consciously or unconsciously?

- Is my behavior consistent with my own values?

- What impulses drive my behavior? Can I improve my control over them?

- What is the smallest, simplest, easiest action I can take to behave more consistently with my values?

\( \blacksquare\)